In a previous article we discussed five things that make for a great website experience. This time we’re exploring a sixth: optimum website performance made possible through caching.

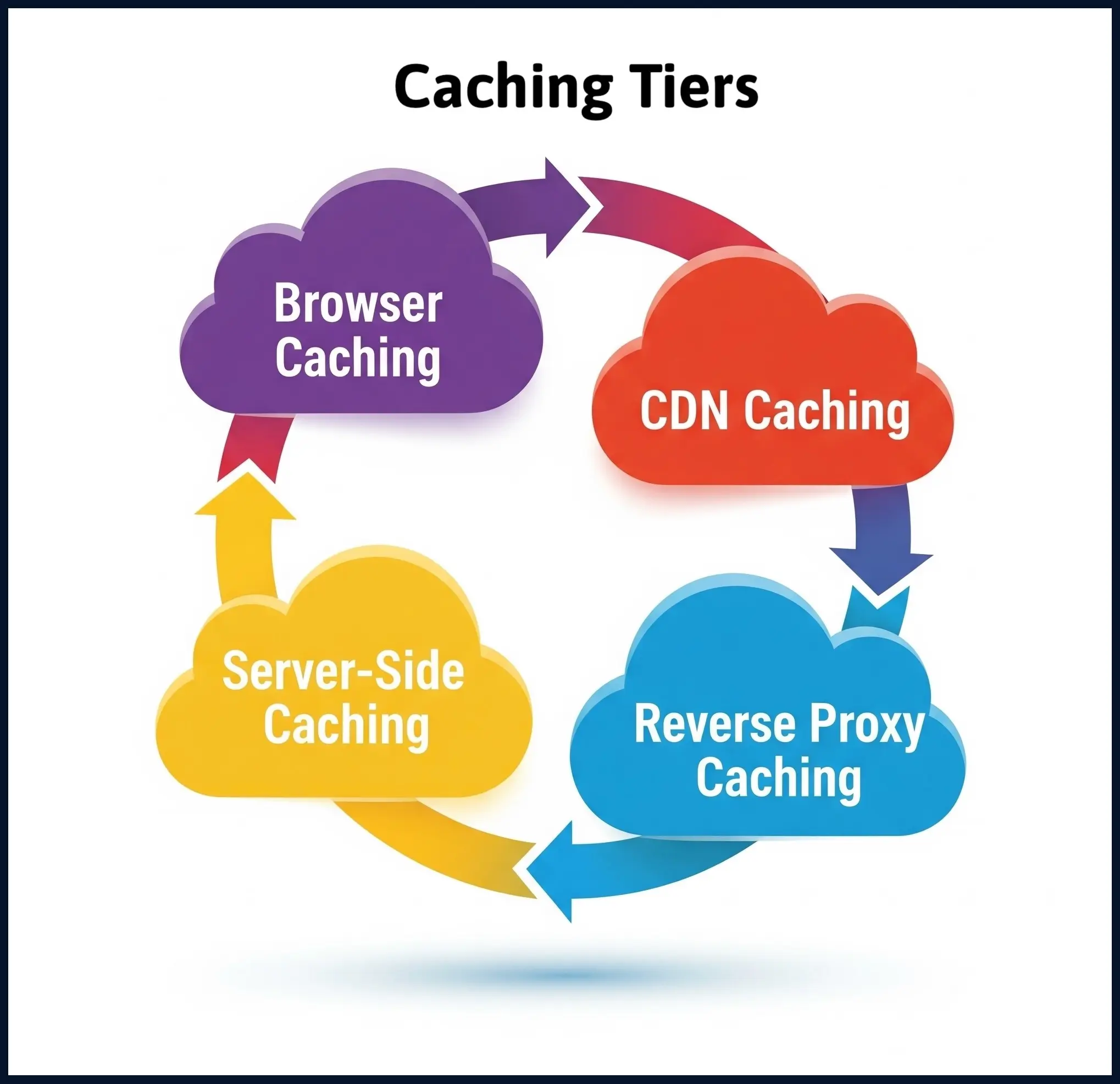

In the relentless pursuit of millisecond-level performance gains, understanding the layered architecture of web application caching is often what separates sluggish applications from lightning-fast user experiences.

Why Caching Architecture Matters

Every additional 100ms of latency reportedly costs Amazon 1% in sales. Google found that increasing search results load time by just 400ms reduced daily searches by 0.6%. With Google processing an estimated 8.5 billion searches per day, that works out to roughly 51 million searches. These aren’t mere statistics; they’re stark reminders that performance optimisation directly impacts revenue, user satisfaction, and competitive advantage.

The modern web application operates in a complex ecosystem where data travels through multiple network hops, database queries execute across distributed systems, and computational processes consume precious CPU cycles. Without a meticulously crafted caching strategy, even the most elegant code becomes a bottleneck in this intricate performance dance.

Layer #1: Browser Caching – The First Line of Defence

Browser caching represents the frontline of your performance optimisation strategy. When properly configured, it eliminates network requests entirely for resources that are still fresh by serving them directly from the browser’s local cache.

HTTP Cache Headers: Your Performance Levers

The sophistication of browser caching lies in its granular control mechanisms:

Cache-Control Directives provide precise instructions for resource handling:

- max-age=31536000 for static assets with versioned filenames

- no-cache for resources requiring validation on each request

- private for user-specific content that shouldn’t be cached by shared proxies
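As a rough illustration, the three directives above might be assigned on the server like this. It is a minimal sketch: the function name `cache_control_for`, the extension list, and the hashed-filename pattern are assumptions made for the example, not part of any particular framework.

```python
import re

# Assumption: versioned assets carry a hex content hash in the filename,
# e.g. "app.3f9a1c2b.css". The pattern and extension list are illustrative.
VERSIONED_ASSET = re.compile(r"\.[0-9a-f]{8,}\.(css|js|png|jpg|svg|woff2)$")


def cache_control_for(path: str, user_specific: bool = False) -> str:
    """Pick a Cache-Control value for a response (hypothetical policy)."""
    if user_specific:
        # The browser may store it, shared proxies and CDNs must not,
        # and the browser revalidates before each reuse.
        return "private, no-cache"
    if VERSIONED_ASSET.search(path):
        # One year. Safe because a content change produces a new filename.
        return "public, max-age=31536000, immutable"
    # Everything else: may be stored, but must be revalidated before reuse.
    return "no-cache"
```

Note that no-cache does not mean "do not store"; it means "check with the server before reusing". The directive that forbids storage altogether is no-store.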

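ETag Headers enable intelligent cache validation, allowing browsers to verify resource freshness without downloading entire files. The browser sends the stored ETag back in an If-None-Match header; if the resource is unchanged, the server answers 304 Not Modified with no body, which can substantially reduce bandwidth usage for unchanged resources.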
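The validation round trip can be sketched in a few lines. This is a simplified illustration, not a full HTTP implementation: the helper names `make_etag` and `respond` are hypothetical, the ETag is derived from a hash of the body, and weak validators (the W/ prefix) are ignored.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (quoted, as HTTP requires)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a GET with an optional If-None-Match."""
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "no-cache"}
    # If-None-Match may carry several comma-separated validators, or "*".
    candidates = [token.strip() for token in (if_none_match or "").split(",")]
    if etag in candidates or "*" in candidates:
        return 304, headers, b""  # unchanged: headers only, no body
    return 200, headers, body
```

On the first visit the client receives a 200 with the ETag; on later visits it echoes that value and gets a body-less 304 for as long as the content hash stays the same.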

Vary Headers ensure cache segmentation based on request headers such as Accept-Encoding, Accept-Language, or User-Agent. This is critical when the same URL serves different encodings, device-specific variants, or languages.
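One way to picture what Vary does inside a cache: the headers it names become part of the cache key. The sketch below assumes a hypothetical `cache_key` helper and a simple string key; real caches normalise header values more carefully.

```python
from typing import Iterable, Mapping


def cache_key(url: str, request_headers: Mapping[str, str], vary: Iterable[str]) -> str:
    """Build a cache key from the URL plus the request headers named in Vary."""
    # Header names are case-insensitive, so normalise them before lookup.
    lowered = {name.lower(): value.strip() for name, value in request_headers.items()}
    parts = [url]
    for name in sorted(header.lower() for header in vary):
        parts.append(f"{name}={lowered.get(name, '')}")
    return "|".join(parts)
```

Two requests for the same URL with different Accept-Encoding values get different keys, while headers not listed in Vary are ignored. Varying on User-Agent tends to fragment the cache heavily because there are so many distinct values, so it is usually worth varying on something narrower where possible.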

Implementation Strategy

Configure aggressive caching for static assets (CSS, JavaScript, images) with far-future expiration dates, while implementing cache-busting through filename versioning. For dynamic content, leverage short-term caching with validation mechanisms to balance freshness with performance.
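Cache-busting through filename versioning usually means embedding a content hash in the asset name at build time. A minimal sketch, assuming a hypothetical `versioned_name` helper and an eight-character SHA-256 prefix (build tools each have their own naming scheme):

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(path: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a content hash before the extension: app.css -> app.<hash>.css."""
    p = PurePosixPath(path)
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))
```

Because the name changes whenever the content does, the old URL can be cached for a year without risk: updated pages simply reference the new filename.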

Layer #2: Content Delivery Networks – Global Performance Distribution

CDNs transform your application from a single-point-of-failure into a globally distributed performance network. By strategically positioning cached content at edge locations worldwide, CDNs reduce latency through geographical proximity and provide massive scalability headroom.

Edge Caching Sophistication

Modern CDNs offer programmable edge computing capabilities that extend beyond simple static asset caching:

Dynamic content caching with intelligent invalidation policies ensures frequently accessed database-driven content remains available at edge locations while maintaining data consistency.

Adaptive image optimisation automatically serves WebP, AVIF, or optimally compressed JPEG formats based on browser capabilities and network conditions.
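Format selection of this kind is typically driven by the Accept request header. The following sketch shows the idea with a hypothetical `pick_image_format` function; it ignores quality values (q=) and network conditions, which a real CDN would also weigh.

```python
def pick_image_format(accept_header: str) -> str:
    """Choose the best image format the client advertises in its Accept header."""
    # Media types the client listed, ignoring parameters such as ";q=0.8".
    offered = {item.split(";")[0].strip().lower() for item in accept_header.split(",")}
    # Preference order is an assumption: AVIF first, then WebP.
    for media_type, fmt in (("image/avif", "avif"), ("image/webp", "webp")):
        if media_type in offered:
            return fmt
    return "jpeg"  # widely supported fallback
```

A response chosen this way should be sent with Vary: Accept so that shared caches keep the variants apart.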

Edge-side includes (ESI) enable fragment-based caching where different page components can have independent cache policies, maximising hit rates while preserving dynamic functionality.

Strategic Implementation

Implement tiered caching policies with longer TTLs for stable content and shorter TTLs for dynamic elements. Leverage CDN analytics to identify cache hit ratios and optimise invalidation strategies based on actual usage patterns.

Layer #3: Reverse Proxy Caching – The Application Gateway

Reverse proxies like Nginx, Varnish, or cloud-based solutions serve as intelligent intermediaries between clients and application servers, providing sophisticated caching logic tailored to application-specific requirements.

Advanced Caching Logic

Surrogate Keys enable precise cache invalidation, allowing you to purge related cached objects when underlying data changes. This surgical approach maintains high cache hit rates while ensuring data consistency.
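To make the idea concrete, here is a small in-memory sketch of tag-based purging. The `TaggedCache` class and the tag names are hypothetical; Varnish and commercial CDNs expose the same concept through their own headers and purge APIs.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Optional, Set


class TaggedCache:
    """In-memory cache whose entries can be purged by surrogate key (tag)."""

    def __init__(self) -> None:
        self._values: Dict[str, Any] = {}
        self._keys_by_tag: Dict[str, Set[str]] = defaultdict(set)

    def set(self, key: str, value: Any, tags: Iterable[str] = ()) -> None:
        self._values[key] = value
        for tag in tags:
            self._keys_by_tag[tag].add(key)

    def get(self, key: str) -> Optional[Any]:
        return self._values.get(key)

    def purge_tag(self, tag: str) -> int:
        """Remove every entry carrying this tag; return how many were removed."""
        removed = 0
        for key in self._keys_by_tag.pop(tag, set()):
            if key in self._values:
                del self._values[key]
                removed += 1
        return removed
```

Tagging a product page with both "product-42" and "category-7" means a price change can purge just that product, while a category rename purges every page in the category. A production version would also drop stale tag references when a key is overwritten with different tags.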

Grace Mode serves stale content when origin servers become unavailable, maintaining application availability during infrastructure failures or maintenance windows.
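The behaviour can be sketched as a cache with two windows: a TTL during which entries are fresh, and a grace period during which a stale copy may still be served if the origin fails. The `GraceCache` class below is an illustrative assumption, not Varnish's implementation; the injectable clock exists only to make it easy to test.

```python
import time
from typing import Any, Callable, Dict, Tuple


class GraceCache:
    """Serve fresh entries within ttl; serve stale ones within grace if the origin fails."""

    def __init__(self, ttl: float, grace: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl, self.grace, self.clock = ttl, grace, clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (stored_at, value)

    def get(self, key: str, fetch_origin: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] <= self.ttl:
            return entry[1]  # fresh hit, origin not contacted
        try:
            value = fetch_origin()
        except Exception:
            # Origin is down: fall back to the stale copy if it is
            # still inside the grace window, otherwise propagate the error.
            if entry is not None and now - entry[0] <= self.ttl + self.grace:
                return entry[1]
            raise
        self._store[key] = (now, value)
        return value
```

HTTP offers a comparable hint for shared caches in the stale-if-error extension to Cache-Control.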

Edge-side Processing allows reverse proxies to execute custom logic, compress responses, or manipulate headers before serving cached content.

Performance Optimisation Techniques

Configure cache hierarchies where reverse proxies check multiple cache stores (memory, SSD, distributed cache) in order of access speed. Implement request collapsing to prevent cache stampedes when multiple requests for the same uncached resource arrive simultaneously.
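Request collapsing is sometimes called "single flight": the first request for a key does the work, and concurrent requests for the same key wait for its result. The thread-based sketch below illustrates the idea under that assumption; the class names are hypothetical and a reverse proxy would implement this internally rather than in application code.

```python
import threading
from typing import Any, Callable, Dict, Optional


class _Call:
    """State shared between the leader and the requests waiting on it."""

    def __init__(self) -> None:
        self.done = threading.Event()
        self.result: Any = None
        self.error: Optional[Exception] = None


class SingleFlight:
    """Collapse concurrent loads of the same key into one call to the loader."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._in_flight: Dict[str, _Call] = {}

    def do(self, key: str, loader: Callable[[], Any]) -> Any:
        with self._lock:
            call = self._in_flight.get(key)
            leader = call is None
            if leader:
                call = _Call()
                self._in_flight[key] = call
        if leader:
            try:
                call.result = loader()  # only the leader hits the origin
            except Exception as exc:
                call.error = exc
            finally:
                with self._lock:
                    del self._in_flight[key]
                call.done.set()  # wake every waiting follower
        else:
            call.done.wait()
        if call.error is not None:
            raise call.error
        return call.result
```

If five requests for an uncached page arrive together, the loader runs once and all five receive the same result, or the same error if the load fails.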

Layer #4: Application-Level Caching – Intelligent Data Management

Application-level caching operates closest to your business logic, providing the most granular control over what gets cached, when, and how cache invalidation occurs.

In-Memory Caching Solutions

Redis and Memcached offer sub-millisecond access times for frequently accessed data structures, sessions, and computed results. The choice between them depends on your specific requirements:

Redis provides advanced data structures (sets, sorted sets, hashes) and persistence options, making it ideal for complex caching scenarios and session management.

Memcached offers pure key-value caching with minimal overhead, perfect for simple caching use cases requiring maximum throughput.

Cache-Aside vs. Write-Through Patterns

Cache-aside Pattern provides maximum control by letting your application logic determine what gets cached and when. This approach works well for read-heavy workloads with unpredictable access patterns.

Write-through Caching maintains cache consistency by updating both the cache and the database as part of every write. This adds some latency to each write, so it suits applications where reads must never see stale data rather than those optimising purely for write throughput.
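The difference between the two patterns is easiest to see side by side. In this sketch plain dictionaries stand in for both the cache (Redis or Memcached in practice) and the database, and the class names are hypothetical.

```python
from typing import Dict, Optional


class CacheAsideRepo:
    """Cache-aside: the application reads the cache first and fills it on a miss."""

    def __init__(self, db: Dict[str, str]) -> None:
        self.db = db
        self.cache: Dict[str, str] = {}
        self.db_reads = 0  # counts how often reads fall through to the database

    def get(self, key: str) -> Optional[str]:
        if key in self.cache:
            return self.cache[key]
        self.db_reads += 1
        value = self.db.get(key)
        if value is not None:
            self.cache[key] = value  # populate on miss
        return value

    def update(self, key: str, value: str) -> None:
        self.db[key] = value
        self.cache.pop(key, None)  # invalidate; the next read repopulates


class WriteThroughRepo(CacheAsideRepo):
    """Write-through: every write updates the database and the cache together."""

    def update(self, key: str, value: str) -> None:
        self.db[key] = value
        self.cache[key] = value  # cache is current immediately after the write
```

With cache-aside, the read after an update costs one database round trip; with write-through, it is served from the cache. In a real deployment the two writes are not atomic, so failure handling between them needs its own design.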

Advanced Strategies

Implement cache warming during application deployment to pre-populate frequently accessed data. Use cache tagging to enable bulk invalidation of related cached objects when business logic changes affect multiple data entities.

Layer #5: Database Query Caching – Optimising Data Access

Database-level caching reduces the cost of repeated data access, whether by keeping frequently used data in memory or by avoiding repeated query parsing, optimisation, and execution for frequently accessed data patterns.

Query Result Caching

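Built-in query result caching is less common than it once was. MySQL’s query cache stored the results of SELECT statements in memory, but it was deprecated in MySQL 5.7 and removed in MySQL 8.0. What most modern databases do provide is a page-level cache: PostgreSQL’s shared buffers, InnoDB’s buffer pool, and MongoDB’s WiredTiger cache keep frequently used data and index pages in memory, so repeated queries avoid disk reads even though they are still parsed and executed.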

Application Query Optimisation

Beyond database-native caching, implement application-level query result caching for expensive analytical queries, aggregations, or complex joins. This approach provides finer control over cache invalidation logic tied to your business rules.
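A minimal sketch of application-level query result caching, using an in-memory SQLite database so it runs anywhere. The `QueryCache` class is hypothetical, and its invalidation is deliberately blunt (clear everything); tying invalidation to business rules is where the real design work lies.

```python
import hashlib
import json
import sqlite3
from typing import Any, Dict, List, Sequence, Tuple


class QueryCache:
    """Cache SELECT results keyed by a hash of the SQL text and its parameters."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self._results: Dict[str, List[Tuple[Any, ...]]] = {}
        self.db_hits = 0  # how many queries actually reached the database

    @staticmethod
    def _key(sql: str, params: Sequence[Any]) -> str:
        raw = json.dumps([sql, list(params)], default=str)
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def query(self, sql: str, params: Sequence[Any] = ()) -> List[Tuple[Any, ...]]:
        key = self._key(sql, params)
        if key not in self._results:
            self.db_hits += 1
            self._results[key] = self.conn.execute(sql, params).fetchall()
        return self._results[key]

    def invalidate_all(self) -> None:
        """Call after writes that could change cached results."""
        self._results.clear()
```

Until `invalidate_all` is called, a cached aggregate keeps returning the old value even after new rows are inserted, which is exactly the staleness trade-off this layer introduces.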

Consider implementing read replicas with eventual consistency for read-heavy workloads, effectively creating a form of data caching through replication.

Orchestrating the Symphony: Integration Strategies

The true power of caching emerges when these layers work in harmonious coordination rather than isolation. Successful implementation requires understanding the interdependencies and optimisation opportunities across the entire stack.

Cache Coherency Management

Implement hierarchical invalidation strategies where changes propagate through cache layers in a coordinated manner. Use cache tags or surrogate keys to enable precise invalidation that maintains performance while ensuring data consistency.

Performance Monitoring and Optimisation

Establish comprehensive monitoring across all caching layers:

- Browser cache hit rates through synthetic monitoring

- CDN performance metrics and cache efficiency ratios

- Application cache hit rates and eviction patterns

- Database query cache effectiveness

Use this data to continuously refine cache policies, identify bottlenecks, and optimise the entire caching ecosystem.

Failure Mode Planning

Design graceful degradation patterns where cache failures don’t cascade into application failures. Implement circuit breakers that detect cache unavailability and route requests directly to origin servers while cache systems recover.
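A circuit breaker around the cache can be quite small. The sketch below is one possible shape, with hypothetical names and thresholds: after a run of consecutive cache errors it bypasses the cache for a cooldown period and sends requests straight to the origin. It omits the "half-open" probing state that fuller implementations use.

```python
import time
from typing import Any, Callable, Optional


class CacheCircuitBreaker:
    """Bypass the cache for `cooldown` seconds after `max_failures` consecutive errors."""

    def __init__(self, max_failures: int = 3, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures, self.cooldown, self.clock = max_failures, cooldown, clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def is_open(self) -> bool:
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at >= self.cooldown:
            # Cooldown elapsed: close the breaker and give the cache another chance.
            self.opened_at, self.failures = None, 0
            return False
        return True

    def fetch(self, cache_get: Callable[[], Any], origin_get: Callable[[], Any]) -> Any:
        if not self.is_open():
            try:
                value = cache_get()
                self.failures = 0
                if value is not None:
                    return value  # cache hit
            except Exception:
                self.failures += 1
                if self.failures >= self.max_failures:
                    self.opened_at = self.clock()  # trip the breaker
        return origin_get()  # miss, cache error, or breaker open
```

While the breaker is open the failing cache is not contacted at all, which keeps its timeouts from adding latency to every request.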

Performance Testing: Validating Your Caching Strategy

Before diving into metrics analysis, establish a robust testing methodology so you can confirm that your caching optimisations deliver measurable improvements. Performance testing tools provide the empirical data necessary to validate theoretical improvements and identify bottlenecks across your caching hierarchy.

Google PageSpeed Insights: Core Web Vitals Analysis

PageSpeed Insights offers invaluable insights into real-world performance impacts of your caching strategy. The tool analyses both laboratory and field data, providing a comprehensive view of how your optimisations affect actual user experiences.

Laboratory Data Analysis:

- Measures Largest Contentful Paint (LCP) improvements from aggressive browser caching policies

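- Evaluates Total Blocking Time (TBT) reductions achieved through JavaScript and CSS caching optimisation (TBT is the lab proxy for responsiveness; First Input Delay was a field-only metric and has since been replaced by Interaction to Next Paint as a Core Web Vital)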

- Assesses Cumulative Layout Shift (CLS) stability when implementing resource preloading strategies

Field Data Insights: Chrome User Experience Report (CrUX) data reveals how your caching optimisations perform across diverse network conditions, devices, and geographical locations. This real-world data proves particularly valuable when evaluating CDN effectiveness and mobile performance improvements.

Optimisation Recommendations: PageSpeed Insights automatically identifies caching opportunities, such as:

- Resources lacking proper cache headers

- Oversized images that could benefit from next-generation formats at CDN level

- Render-blocking resources requiring optimised delivery strategies

WebPageTest: Deep Performance Archaeology

WebPageTest provides granular visibility into the performance impact of each caching layer through detailed waterfall charts and advanced testing scenarios.

Multi-Location Testing: Execute tests from various global locations to validate CDN performance and identify geographical performance disparities. This approach reveals whether your edge caching strategy effectively serves international audiences.

Connection Throttling: Test performance across different network conditions (3G, 4G, broadband) to understand how caching strategies perform under constrained bandwidth scenarios. This testing proves particularly crucial for mobile-first optimisation strategies.

Repeat View Analysis: WebPageTest’s repeat view functionality demonstrates the effectiveness of browser caching policies. The performance differential between first and repeat visits quantifies the impact of your cache headers and resource caching strategy.

Advanced Testing Scenarios:

- Single Point of Failure (SPOF) testing evaluates graceful degradation when caching layers become unavailable

- Script injection capabilities allow testing cache warming procedures and invalidation workflows

- Video capture provides visual evidence of loading performance improvements

Synthetic Monitoring Integration

Implement continuous performance monitoring using tools like Pingdom, GTmetrix, or custom synthetic monitoring solutions to track caching performance over time.

Alerting Strategies: Configure alerts for cache hit ratio degradation, TTFB increases, or Core Web Vitals threshold breaches. These early warning systems enable proactive optimisation before user experience degrades.

Trend Analysis: Long-term performance data reveals seasonal patterns, traffic growth impacts, and the ongoing effectiveness of caching strategies as your application evolves.

Measuring Success: Performance Metrics That Matter

Effective caching optimisation requires measuring the right metrics:

Time to First Byte (TTFB) measures server response time improvements from application and database caching optimisations.

Largest Contentful Paint (LCP) reflects the combined impact of browser caching, CDN optimisation, and resource delivery efficiency.

Cache hit ratios across all layers provide insight into caching effectiveness and opportunities for improvement.

Origin server load reduction demonstrates the infrastructure cost savings and scalability improvements achieved through effective caching.
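Hit ratios and origin load are linked by simple arithmetic, sketched below. The function names are hypothetical, and `origin_fraction` assumes the layers sit in series and that a miss at one layer is independent of the next, which is a simplification.

```python
from typing import Sequence


def hit_ratio(hits: int, misses: int) -> float:
    """Fraction of requests served from cache; 0.0 when there was no traffic."""
    total = hits + misses
    return hits / total if total else 0.0


def origin_fraction(layer_hit_ratios: Sequence[float]) -> float:
    """Share of requests that miss every layer and reach the origin."""
    fraction = 1.0
    for ratio in layer_hit_ratios:
        fraction *= 1.0 - ratio  # only misses continue to the next layer
    return fraction
```

As an illustration with made-up numbers, layers with hit ratios of 60%, 80%, and 50% would leave about 4% of requests reaching the origin, since 0.4 × 0.2 × 0.5 = 0.04.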

The Path Forward: Continuous Optimisation

Website caching isn’t a set-and-forget optimisation—it’s an ongoing discipline requiring continuous measurement, analysis, and refinement. As your application evolves, user patterns change, and new caching technologies emerge, your caching strategy must adapt accordingly.

The most successful performance engineers treat caching as a dynamic system requiring constant attention and optimisation rather than a static configuration. By understanding the intricate taxonomy of caching layers and their interdependencies, you can unlock performance improvements that directly impact your application’s success metrics.

On the web every millisecond matters, and the sophisticated orchestration of these caching layers is what separates high-performing applications from the rest.